This is the third post of the tutorial series called Node Hero - in these chapters you can learn how to get started with Node.js and deliver software products using it.

In this chapter, I’ll guide you through async programming principles, and show you how to do async in JavaScript and Node.js.

Upcoming and past chapters:

- Getting started with Node.js

- Using NPM

- Understanding async programming [you are reading it now]

- Your first Node.js server

- Node.js Database Tutorial

- Node.js request module tutorial

- Node.js project structure tutorial

- Node.js authentication using Passport.js

- Node.js unit testing tutorial

- Debugging Node.js applications

- Node.js Security Tutorial

- How to Deploy Node.js Applications

- Monitoring Node.js Applications

Synchronous Programming

In traditional programming practice, most I/O operations happen synchronously. If you think about Java, and about how you would read a file using Java, you would end up with something like this:

try (FileInputStream inputStream = new FileInputStream("foo.txt")) {

// IOUtils comes from Apache Commons IO (org.apache.commons.io.IOUtils)

String fileContent = IOUtils.toString(inputStream, StandardCharsets.UTF_8);

}

What happens in the background? The main thread will be blocked until the file is read, which means that nothing else can be done in the meantime. To solve this problem and utilize your CPU better, you would have to manage threads manually.

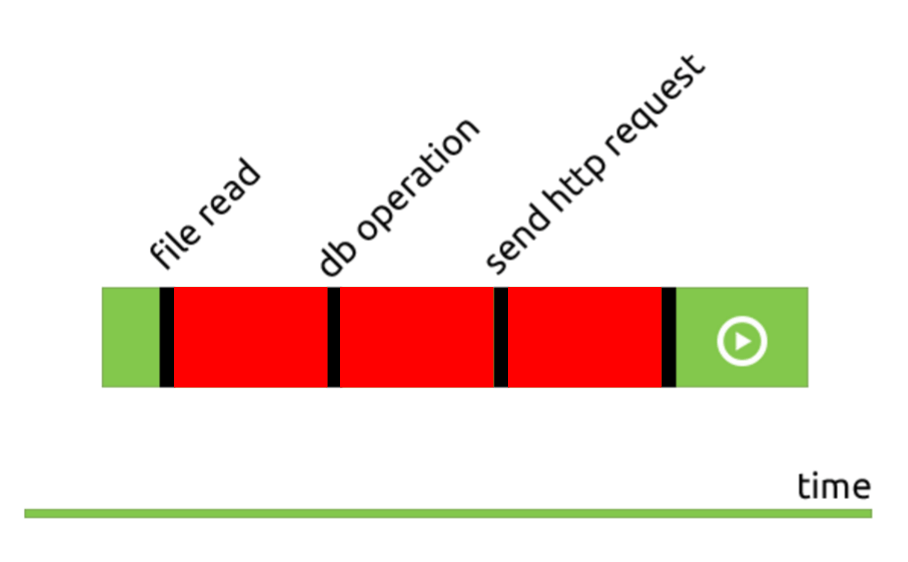

If you have more blocking operations, the event queue gets even worse:

(The red bars show when the process is waiting for an external resource's response and is blocked, the black bars show when your code is running, the green bars show the rest of the application)


To resolve this issue, Node.js introduced an asynchronous programming model.

Asynchronous programming in Node.js

Asynchronous I/O is a form of input/output processing that permits other processing to continue before the transmission has finished.

In the following example, I will show you a simple file reading process in Node.js - both in a synchronous and asynchronous way, with the intention of showing you what can be achieved by avoiding blocking your applications.

Let's start with a simple example - reading a file using Node.js in a synchronous way:

const fs = require('fs')

let content

try {

content = fs.readFileSync('file.md', 'utf-8')

} catch (ex) {

console.log(ex)

}

console.log(content)

What just happened here? We tried to read a file using the synchronous interface of the fs module. It works as expected - the content variable will contain the content of file.md. The problem with this approach is that Node.js will be blocked until the operation is finished - meaning it can do absolutely nothing while the file is being read.

Let's see how we can fix it!

Asynchronous programming - as we know it now in JavaScript - can only be achieved with functions being first-class citizens of the language: they can be passed around like any other variables to other functions. Functions that can take other functions as arguments are called higher-order functions.

One of the simplest examples of a higher-order function:

const numbers = [2,4,1,5,4]

function isBiggerThanTwo (num) {

return num > 2

}

numbers.filter(isBiggerThanTwo)

In the example above we pass in a function to the filter function. This way we can define the filtering logic.

This is how callbacks were born: if you pass a function to another function as a parameter, you can call it within the function when you are finished with your job. There is no need to return values; you just call another function with them.

These so-called error-first callbacks, which receive a possible error as their first argument and the result after it, are at the heart of Node.js itself - the core modules use them, as do most of the modules found on NPM.

const fs = require('fs')

fs.readFile('file.md', 'utf-8', function (err, content) {

if (err) {

return console.log(err)

}

console.log(content)

})

Things to notice here:

- error-handling: instead of a try-catch block you have to check for errors in the callback

- no return value: async functions don't return values, but values will be passed to the callbacks
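To make the error-first convention more concrete, here is a minimal sketch of a function of your own that follows it. The name divideAsync and its behavior are made up for this illustration; the only Node.js API it relies on is setImmediate, which defers the callback so that it always runs asynchronously.

```javascript
// Hypothetical helper (not part of Node.js): divides a by b and reports the
// outcome through an error-first callback instead of returning it.
function divideAsync (a, b, callback) {
  // setImmediate defers the work, so the callback never runs synchronously
  setImmediate(() => {
    if (b === 0) {
      // on failure, the error is passed as the first argument
      return callback(new Error('Cannot divide by zero'))
    }
    // on success, the first argument is null and the result comes second
    callback(null, a / b)
  })
}

divideAsync(10, 2, (err, result) => {
  if (err) {
    return console.log(err.message)
  }
  console.log(result) // prints 5
})
```

The caller handles it exactly like fs.readFile above: check the first argument for an error, and use the remaining arguments only if there was none.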

Let's modify this file a little bit to see how it works in practice:

const fs = require('fs')

console.log('start reading a file...')

fs.readFile('file.md', 'utf-8', function (err, content) {

if (err) {

console.log('error happened during reading the file')

return console.log(err)

}

console.log(content)

})

console.log('end of the file')

The output of this script (assuming the read fails, for example because file.md does not exist) will start with:

start reading a file...

end of the file

error happened during reading the file

As you can see, once we started to read our file, the execution continued, and the application printed end of the file. Our callback was only called once the file read was finished. How is it possible? Meet the event loop.

The Event Loop

The event loop is at the heart of Node.js / JavaScript - it is responsible for scheduling asynchronous operations.

Before diving deeper, let's make sure we understand what event-driven programming is.

Event-driven programming is a programming paradigm in which the flow of the program is determined by events such as user actions (mouse clicks, key presses), sensor outputs, or messages from other programs/threads.

In practice, it means that applications act on events.
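As a rough sketch of what acting on events can look like in Node.js, the built-in events module lets you register listeners and emit events. The order event name, the shop emitter and the payloads below are invented for this example.

```javascript
const EventEmitter = require('events')

// hypothetical emitter and event name, used only for illustration
const shop = new EventEmitter()
const received = []

// register a listener: it runs every time an 'order' event is emitted
shop.on('order', (item) => {
  received.push(item)
  console.log(`order received: ${item}`)
})

// emitting the event triggers the listener with the given payload
shop.emit('order', 'coffee')
shop.emit('order', 'tea')
```

Note that emit calls the listeners synchronously, in the order they were registered. The event-driven part is the structure: the code that reacts to an order does not need to know who emits it or when.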

Also, as we have already learned in the first chapter, Node.js is single-threaded - from a developer's point of view. It means that you don't have to deal with threads and synchronizing them, Node.js abstracts this complexity away. Everything except your code is executing in parallel.
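One way to observe this single-threaded scheduling is a small sketch like the following, where the order array is just a helper made up for the example. Even with a delay of 0 milliseconds, the timer callback can only run after the currently executing code has finished.

```javascript
// hypothetical helper array that records the order of execution
const order = []

order.push('first')

// the callback is queued and runs only after the current code has finished,
// even though the delay is 0 ms
setTimeout(() => {
  order.push('third')
  console.log(order.join(', ')) // prints: first, second, third
}, 0)

order.push('second')
```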

To understand the event loop more in-depth, continue watching this video:

Async Control Flow

Now that you have a basic understanding of how async programming works in JavaScript, let's take a look at a few examples of how you can organize your code.

Async.js

Async.js helps to structure your applications and makes control flow easier.

Let’s check a short example of using Async.js, and then rewrite it by using Promises.

The following snippet maps through three files for stats on them:

const async = require('async')

const fs = require('fs')

async.map(['file1', 'file2', 'file3'], fs.stat, function (err, results) {

// results is now an array of stats for each file

})

Promises

The Promise object is used for deferred and asynchronous computations. A Promise represents an operation that hasn't completed yet but is expected in the future.

In practice, the previous example could be rewritten as follows:

function stats (file) {

return new Promise((resolve, reject) => {

fs.stat(file, (err, data) => {

if (err) {

return reject(err)

}

resolve(data)

})

})

}

Promise.all([

stats('file1'),

stats('file2'),

stats('file3')

])

.then((data) => console.log(data))

.catch((err) => console.log(err))

Of course, if you use a method that already has a Promise interface, then the Promise example can be much shorter as well.
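For instance, assuming a Node.js version that ships the fs.promises API, the manual wrapper is no longer needed. The statAll helper name below is made up for this sketch.

```javascript
const fs = require('fs')

// hypothetical helper: fs.promises.stat already returns a Promise,
// so there is nothing to wrap by hand
function statAll (files) {
  return Promise.all(files.map((file) => fs.promises.stat(file)))
}

statAll(['file1', 'file2', 'file3'])
  .then((data) => console.log(data))
  .catch((err) => console.log(err.message))
```

As with the hand-written version, Promise.all rejects as soon as any of the stat calls fails, so a single missing file sends execution to the catch handler.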
